Unpacking Your Terabyte: The Sustainability Story Behind Cloud Storage

Why This Blog?

Sustainability is no longer an optional corporate virtue - it’s becoming a technical responsibility.

Every byte stored, every VM spun up, and every redundant replica sitting idle consumes real energy - and that energy has a carbon cost.

While hyperscalers like AWS, Azure, and Google Cloud have pledged to go carbon-neutral and offer their own dashboards and tools for tracking progress:

- AWS: Sustainability, Carbon footprint

- GCP: Sustainability, Carbon footprint

- Azure: Sustainability, Carbon footprint

…the truth is that most of a cloud’s footprint still depends on how efficiently customers use the resources they provision.

That’s the motivation behind this blog - to unpack what really happens under the hood when you allocate a terabyte in the cloud, and how smarter storage management can cut costs and carbon.

Have You Ever Wondered What Really Happens When You Create a Cloud Disk?

You open AWS, click “Create EBS Volume”, or spin up a managed disk on Azure or GCP - and in a few seconds, your 1 TB of block storage appears.

It feels instant and effortless.

But behind that single click lies a vast, carefully orchestrated, energy-hungry web of servers, switches, and spinning or flash-based drives that powers your storage.

When you provision a terabyte in the cloud, you’re not reserving a single disk - you’re claiming logical capacity (virtualized storage) that sits atop a distributed network of physical drives managed by your cloud provider.

The Invisible Machinery of Cloud Storage

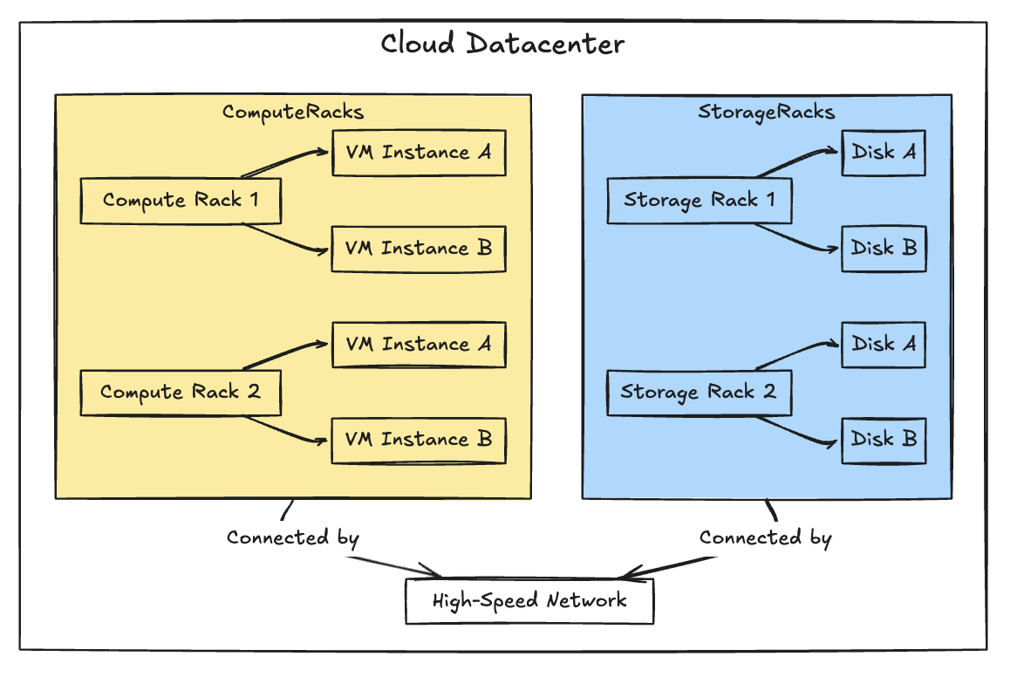

In a hyper-scale data center, thousands of servers are organized into racks, each with:

- Its own power supply,

- A Top-of-Rack (ToR) switch connecting to the underlay network, and

- Dedicated connections to high-speed fabrics that link compute and storage.

Typically, compute and storage are decoupled:

- Your EC2 or VM runs on one cluster.

- Your block storage (EBS, Azure Managed Disks, or GCP Persistent Disks) resides on another.

- They communicate over high-speed, low-latency networks.

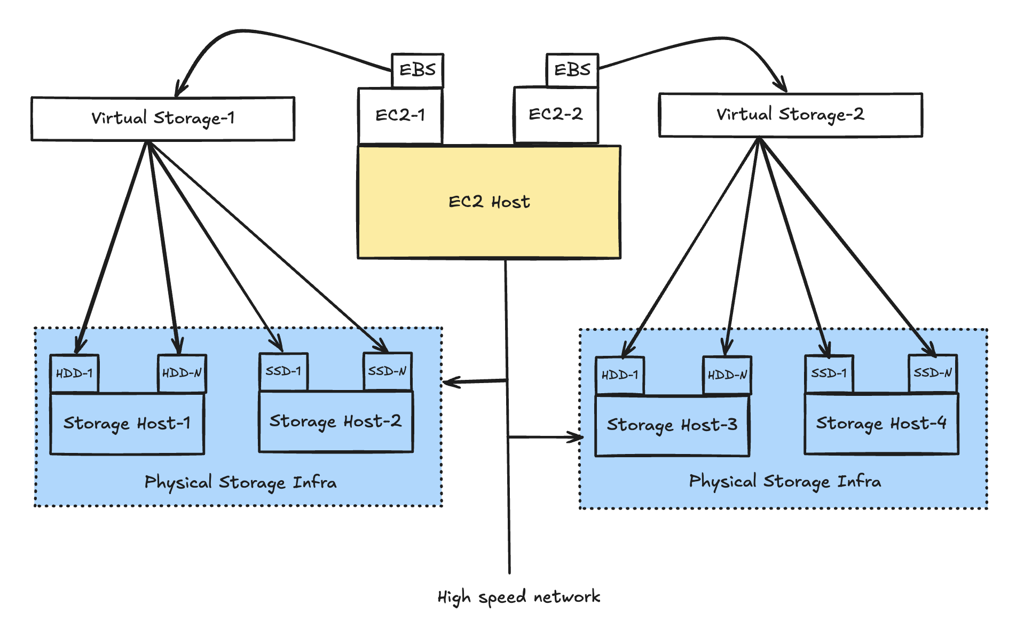

When your VM attaches a 1 TB EBS volume, it behaves as if it has a dedicated physical drive.

In reality, that “disk” is:

- Backed by multiple physical disks

- Spread across racks and servers

- Replicated for durability

- Connected through redundant power and networking

What Happens When You Write Data

When your application writes data:

- It’s split into blocks (typically 256 KB–1 MB).

- Each block is replicated across multiple storage nodes for durability.

- A write completes only when data exists in multiple places.

That 1 TB logical volume may therefore translate to 2–3 TB of physical storage, once replication and metadata overheads are factored in.
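To make that arithmetic concrete, here is a minimal Python sketch of the replication math. It assumes plain full-copy replication and ignores thin provisioning, deduplication, compression, and erasure coding, any of which a real provider may use. The function name and the 5% metadata overhead are illustrative assumptions, not provider-published values.

```python
def physical_footprint_tb(logical_tb: float, replicas: int,
                          metadata_overhead: float = 0.0) -> float:
    """Raw capacity (TB) needed to hold `logical_tb` under full-copy replication.

    metadata_overhead is a fraction of the replicated data (0.05 means 5%).
    It is an illustrative parameter, not a figure published by any provider.
    """
    if logical_tb < 0 or replicas < 1 or metadata_overhead < 0:
        raise ValueError("logical_tb and metadata_overhead must be >= 0, replicas >= 1")
    return logical_tb * replicas * (1 + metadata_overhead)


if __name__ == "__main__":
    # Two or three replicas, matching the 2-3 TB range discussed above.
    for replicas in (2, 3):
        raw = physical_footprint_tb(1.0, replicas, metadata_overhead=0.05)
        print(f"1 TB logical x {replicas} replicas -> {raw:.2f} TB physical")
```

The point of the sketch is only that physical capacity scales with the replica count, so every over-allocated logical terabyte is multiplied on the way down to real hardware.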

To optimize, cloud providers employ techniques like:

- Thin provisioning: physical space is allocated only when data is written

- Deduplication: identical data blocks are stored once, and

- Compression: fewer physical bytes per logical byte

But providers still plan for your full allocation, because they must guarantee that you can write that 1 TB whenever you need it. So your unused space still adds to capacity-planning and provisioning pressure: someone, somewhere, had to install extra drives for those “just in case” terabytes.

You can think of it like airplane seats:

Even if some passengers (data) don’t show up, the airline (cloud provider) still fueled the plane for full capacity.

From our own experience of managing block storage for enterprises, over-provisioned volumes remain a constant - most customers over-allocate by 50–70%, paying for (and indirectly powering) capacity that isn’t being used.
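If you want a rough feel for your own numbers, the unused share of a fleet is easy to estimate. The sketch below is a hypothetical illustration: the `Volume` class, the volume names, and the sizes are made up for the example, and "unused share" is taken to mean unused capacity divided by provisioned capacity, which is one possible reading of over-allocation.

```python
from dataclasses import dataclass


@dataclass
class Volume:
    """A block volume with its provisioned and actually used capacity (GB)."""
    name: str
    provisioned_gb: float
    used_gb: float

    @property
    def unused_gb(self) -> float:
        # Clamp at zero in case usage is reported slightly above the provisioned size.
        return max(self.provisioned_gb - self.used_gb, 0.0)


def unused_share(volumes) -> float:
    """Fraction of provisioned capacity across `volumes` that holds no data."""
    total = sum(v.provisioned_gb for v in volumes)
    if total == 0:
        return 0.0
    return sum(v.unused_gb for v in volumes) / total


# Hypothetical fleet, for illustration only.
fleet = [
    Volume("app-data", 1000, 350),
    Volume("db-logs", 500, 200),
    Volume("scratch", 2000, 450),
]

if __name__ == "__main__":
    for v in fleet:
        print(f"{v.name}: {v.unused_gb:.0f} GB unused of {v.provisioned_gb:.0f} GB")
    print(f"Fleet-wide unused share: {unused_share(fleet):.0%}")
```

In practice the used and provisioned figures would come from your monitoring agent or the cloud provider's metrics rather than hard-coded values.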

The Real-World Footprint of a Terabyte

All this digital abstraction rests on physical SSDs and HDDs, each with a tangible energy and carbon footprint - from manufacture to transport, operation, and disposal.

When enterprises over-provision storage, the carbon emitted in manufacturing and operating that unused capacity is effectively wasted. For example, every 1 TB of SSD storage that sits idle still represents ~375 kg CO₂e, emissions (and cloud spend) that smarter provisioning could have avoided.
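As a back-of-the-envelope sketch, the ~375 kg CO₂e per idle terabyte quoted above can be turned into an estimate for a whole allocation. The function name and the 10 TB / 4 TB example are hypothetical, and the per-terabyte factor is simply the figure from this post, applied to logical capacity without accounting for replication.

```python
# Per-terabyte figure quoted in this post for idle SSD capacity (kg CO2e).
KG_CO2E_PER_IDLE_TB = 375.0


def idle_carbon_kg(provisioned_tb: float, used_tb: float,
                   kg_per_tb: float = KG_CO2E_PER_IDLE_TB) -> float:
    """Estimate the CO2e (kg) tied up in provisioned-but-unused capacity."""
    if provisioned_tb < 0 or used_tb < 0:
        raise ValueError("capacities must be non-negative")
    idle_tb = max(provisioned_tb - used_tb, 0.0)
    return idle_tb * kg_per_tb


if __name__ == "__main__":
    # Hypothetical example: 10 TB provisioned, 4 TB actually used.
    print(f"{idle_carbon_kg(10, 4):.0f} kg CO2e")  # 6 idle TB * 375 -> 2250 kg CO2e
```

Treat the result as an order-of-magnitude indicator; the real figure depends on hardware generation, region, and how the provider backs the volume.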

What Exactly Is CO₂e?

CO₂e (Carbon Dioxide Equivalent) is a fancy way of saying:

“Let’s express all greenhouse gases (CO₂, CH₄, N₂O, etc.) as if they were plain old CO₂.”

So when you see 1 kg CO₂e, it means a warming effect equal to that of emitting 1 kg of carbon dioxide.
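The conversion itself is a single multiplication: the mass of each gas times its global warming potential (GWP). The sketch below uses the 100-year GWP values from the IPCC Fifth Assessment Report (methane 28, nitrous oxide 265). Other reports and time horizons give somewhat different factors, so treat these numbers as one common convention rather than the only one. The function name is our own.

```python
# 100-year global warming potentials (IPCC AR5, without climate-carbon feedbacks).
# Other assessment reports or time horizons use different factors.
GWP_100 = {"CO2": 1, "CH4": 28, "N2O": 265}


def co2e_kg(mass_kg: float, gas: str) -> float:
    """Convert `mass_kg` of a greenhouse gas into kg of CO2-equivalent."""
    if gas not in GWP_100:
        raise KeyError(f"no GWP factor known for {gas!r}")
    return mass_kg * GWP_100[gas]


if __name__ == "__main__":
    for gas in GWP_100:
        print(f"1 kg {gas} = {co2e_kg(1, gas)} kg CO2e")
```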

Data Centers: The Energy Giants of the Digital Age

Globally, data centers consume ~1.5–2% of total electricity, about 460–540 TWh per year (2025), projected to exceed 1,000 TWh by 2030 due to AI and data-intensive workloads.

For perspective, the world’s total electricity use is around 27,000 TWh per year, so data centers already consume as much electricity as several developed nations combined.
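The percentage follows directly from those two figures. Here is a quick check in Python, using only the numbers quoted above; the 2030 line assumes, purely for illustration, that world demand stays flat at 27,000 TWh, which it almost certainly will not.

```python
WORLD_TWH = 27_000  # approximate annual world electricity use quoted above


def share_pct(datacenter_twh: float, world_twh: float = WORLD_TWH) -> float:
    """Data-center consumption as a percentage of world electricity use."""
    return 100 * datacenter_twh / world_twh


if __name__ == "__main__":
    for twh in (460, 540):
        print(f"{twh} TWh -> {share_pct(twh):.1f}% of world electricity")
    # Prints 1.7% and 2.0%, in line with the ~1.5-2% range.

    # Illustrative only: 1,000 TWh against today's (flat) world total.
    print(f"1000 TWh -> {share_pct(1000):.1f}%")
```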

How Green Is Cloud Energy?

The major cloud providers publish sustainability reports indicating growing use of renewable power:

- AWS reached > 80% renewable energy as of 2024 and targets full renewables by 2025.

- GCP reports > 90% carbon-free energy (CFE) coverage globally.

- Azure aims for 100% renewable energy by 2025.

However, across all data centers globally, ~60–65% of electricity is still non-renewable, primarily from coal, natural gas, and oil.

This non-renewable share is why efficiency matters, not merely replacing fossil power with renewables.

Why Smart Storage Management Matters

Leaving terabytes unutilized is more than a cost issue - it’s a carbon multiplier.

Every idle terabyte implies power for cooling, redundancy, and backup on disks that may never be used.

That’s where Lucidity steps in.

🔍 Lucidity Assessment

- Provides a clear view of your storage footprint across AWS, GCP, and Azure.

- Provides insights into root and data partitions across Windows and Linux.

- Identifies over-provisioned volumes, underutilized resources, and optimization opportunities - the insight you need before taking action.

🔧 Lucidity AutoScaler

- Optimizes block storage utilization across AWS, GCP, and Azure by automatically resizing volumes based on actual usage.

- No downtime, no data loss - just smarter capacity management.

- By reducing over-provisioning, AutoScaler directly helps cut both cloud bills and carbon footprints.

⚙️ Lucidity Lumen

- Performs smart tiering and deep analytics on your storage stack.

- Identifies unused, stale, or underutilized capacity, and can recommend or automate moves to cheaper, greener storage tiers - ensuring every byte has a purpose.

Together, AutoScaler + Lumen enable organizations to move from passive monitoring to active sustainability through optimization.

To Conclude…

In a world where electricity is becoming an increasingly scarce resource, every kilowatt-hour saved counts. Data centers are the backbone of our digital lives.

As we optimize our digital habits, such as managing cloud storage efficiently, we contribute to a more sustainable future. Each small action, like reducing unnecessary data storage, can help alleviate the strain on our power grids and ensure that electricity is available for essential services.

So, let's continue to be mindful of our digital footprints and play a part in conserving energy for a better tomorrow.

On a lighter note, every time you right-size your cloud storage,

Somewhere a sustainability officer smiles.

A data center fan spins a little slower.

And an SSD breathes easier. 😄

Or as we like to say:

“May your disks be full (of data).”

-------------------------------------------------------------------------------------

Data Disclaimer

All CO₂e and energy figures are based on publicly available studies and vendor sustainability reports. No independent verification or measurement has been conducted. Actual numbers may vary based on region, data-center efficiency, and hardware generation.

AI Assistance Disclaimer

The ideas, perspective, and analysis in this blog are entirely our own. Assistance from Large Language Models (LLMs) was used for formatting and improving readability.
